[ICLR 2018 at a Glance] Nine Invited Talks and a First Look at the Latest from DeepMind and Google

Reposted from | 新智元

Editor | 磐石

Produced by | 磐创AI technical team

Sources | ICLR, Google/DeepMind blogs

[磐创AI editor's note] This article is reposted with permission from 新智元 and covers the highlights of ICLR 2018.

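ICLR 2018 opens tomorrow (April 30, local time) in Vancouver. Over the past few days Google, DeepMind, and other major labs have published their paper lists for this year's conference. This article gathers them, together with the three best papers and the nine invited talks, into a roundup of this year's highlights.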

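ICLR 2018 runs for four days and ends on May 3. As in previous years, each day is split into a morning and an afternoon session. The sessions follow largely the same format: an invited talk, then oral presentations of the papers selected for them, a coffee break, and a poster session.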

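The nine invited talks:

- Erik Brynjolfsson: What Can Machine Learning Do? Workforce Implications
- Bernhard Schoelkopf: Learning Causal Mechanisms
- Suchi Saria: Individualizing Healthcare with Machine Learning
- Kristen Grauman: Visual Learning with Unlabeled Video and Look-Around Policies
- Koray Kavukcuoglu: From Generative Models to Generative Agents
- Blake Richards: Deep Learning with Neocortical Microcircuits
- Daphne Koller: A Fireside Chat with Daphne Koller
- Joelle Pineau: Reproducibility, Reusability, and Robustness in Deep Reinforcement Learning
- Christopher D Manning: A Neural Network Model That Can Reason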

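General Chairs

Yoshua Bengio, Université de Montréal

Yann LeCun, New York University & Facebook

Senior Program Chair

Tara Sainath, Google

Program Chairs

Iain Murray, University of Edinburgh

Marc’Aurelio Ranzato, Facebook

Oriol Vinyals, Google DeepMind

Steering Committee

Aaron Courville, Université de Montréal

Hugo Larochelle, Google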

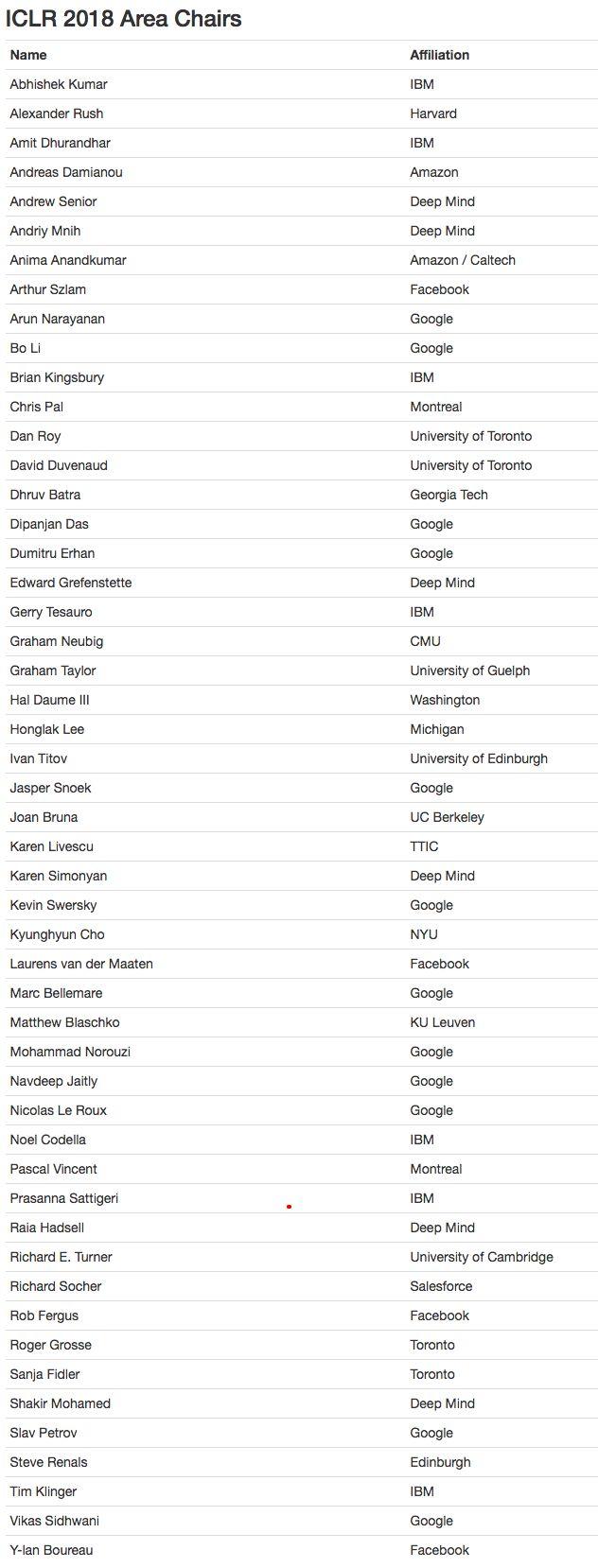

Area Chairs

Best reviewers:

Amir-massoud Farahmand, Andrew Owens, David Kale, George Philipp, Julien Cornebise, Michiel van de Panne, Tom Schaul, Yisong Yue

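ICLR is often called the "uncrowned king" of deep learning conferences. The Dataviz site has compiled statistics on this year's ICLR, with a few interesting findings:

- Sergey Levine of UC Berkeley has the most accepted papers;
- Yoshua Bengio submitted the most papers;
- Google ranks first among institutions in both accepted and submitted papers;
- NVIDIA has the highest acceptance rate;
- The United States leads in both submitted and accepted papers;
- China submitted the second-largest number of papers, behind only the United States.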

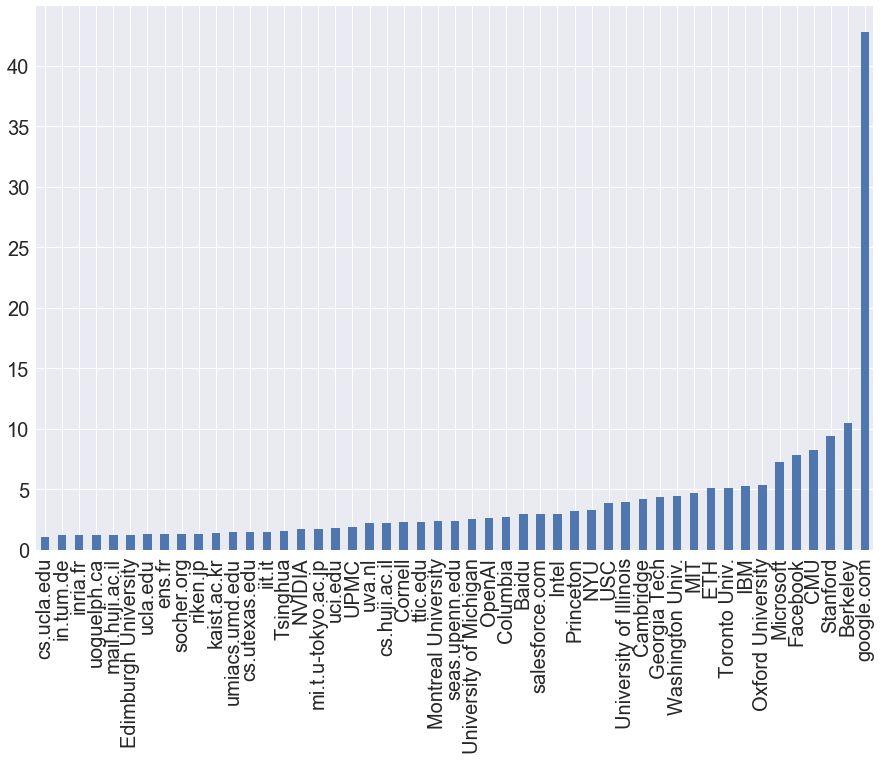

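Institutions with the most accepted papers: Google first, Berkeley second, Stanford third

If all authors of a paper come from the same institution, that institution is credited with one paper. If only one of three authors comes from the institution, it is credited with one third of a paper.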
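The counting rule can be sketched in a few lines of Python. This is only an illustration of the arithmetic described above, not the code Dataviz used: the function name `fractional_counts` and the institutions "Inst A" and "Inst B" are made up for the example.

```python
from collections import defaultdict
from fractions import Fraction


def fractional_counts(papers):
    """Credit each institution with its share of every paper.

    `papers` is a list of papers; each paper is a list holding one
    affiliation per author (hypothetical input format).
    """
    totals = defaultdict(Fraction)
    for affiliations in papers:
        share = Fraction(1, len(affiliations))  # each author carries 1/n of the paper
        for institution in affiliations:
            totals[institution] += share
    return dict(totals)


papers = [
    ["Inst A", "Inst A"],            # all authors from A: A is credited with 1 paper
    ["Inst A", "Inst B", "Inst B"],  # A is credited with 1/3, B with 2/3
]
counts = fractional_counts(papers)
```

Exact fractions are used so that the shares of each paper always add up to exactly one; this is also why the reported totals are non-integer values.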

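Google tops the list with 3.33 oral papers, 42.78 posters, and an acceptance rate of 0.56. Berkeley is second with 2.56 orals, 10.48 posters, and an acceptance rate of 0.46. Stanford is third with 1 oral, 9.4 posters, and an acceptance rate of 0.36.

The remaining institutions in the top ten are CMU, Facebook, Microsoft, the University of Oxford, IBM, the University of Toronto, and ETH.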

Below is an introduction to the ICLR 2018 best papers, followed by an overview of the papers from DeepMind and Google.

Paper links:

https://deepmind.com/blog/deepmind-papers-iclr-2018/

https://research.googleblog.com/2018/04/google-at-iclr-2018.html

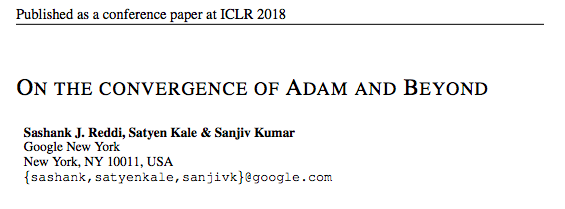

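Best paper 1:

On the Convergence of Adam and Beyond

Authors: Sashank J. Reddi, Satyen Kale, Sanjiv Kumar

Contributions of this work:

- Using a simple convex optimization problem, the paper shows how the exponential moving average used in RMSprop and Adam can cause non-convergence. The analysis extends to other methods based on exponential moving averages, such as Adadelta and NAdam.
- To guarantee convergence, the paper gives the algorithm "long-term memory" of past gradients, and it points out a flaw in the convergence proof of Adam in Kingma & Ba (2015). To fix it, the paper proposes variants of Adam that rely on this long-term memory without increasing the time or space complexity of the algorithm. It also provides a convergence analysis for the new variants, building on the analysis in Kingma & Ba (2015).
- An empirical study of the Adam variant shows that it performs similarly to or better than the original algorithm on some commonly used machine learning problems.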
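The "long-term memory" idea can be sketched as follows. The paper calls its main variant AMSGrad; it keeps the running maximum of the second-moment estimate so that the effective step size never grows. This is a minimal scalar sketch, not the authors' code: the toy objective f(x) = x², the hyperparameter values, and the small `eps` term are assumptions made for the example.

```python
import math


def amsgrad_step(x, grad, state, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One AMSGrad-style update for a scalar parameter (illustrative sketch)."""
    m = beta1 * state["m"] + (1 - beta1) * grad          # moving average of gradients
    v = beta2 * state["v"] + (1 - beta2) * grad * grad   # moving average of squared gradients
    v_hat = max(state["v_hat"], v)                       # long-term memory: never let it shrink
    state.update(m=m, v=v, v_hat=v_hat)
    return x - lr * m / (math.sqrt(v_hat) + eps)         # eps is an assumption for numerical safety


state = {"m": 0.0, "v": 0.0, "v_hat": 0.0}
x = 5.0
history = []
for _ in range(2000):
    grad = 2 * x                     # gradient of the toy objective f(x) = x^2
    x = amsgrad_step(x, grad, state)
    history.append(state["v_hat"])
```

The only change relative to an Adam-style update (without bias correction) is the `max` line: plain Adam divides by the current `v`, which can drop sharply after a run of small gradients, whereas here the denominator is non-decreasing. The extra state is a single value per parameter.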

最佳论文2:

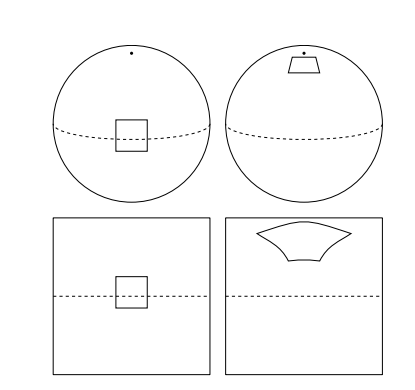

球形卷积神经网络(Spherical CNNs)

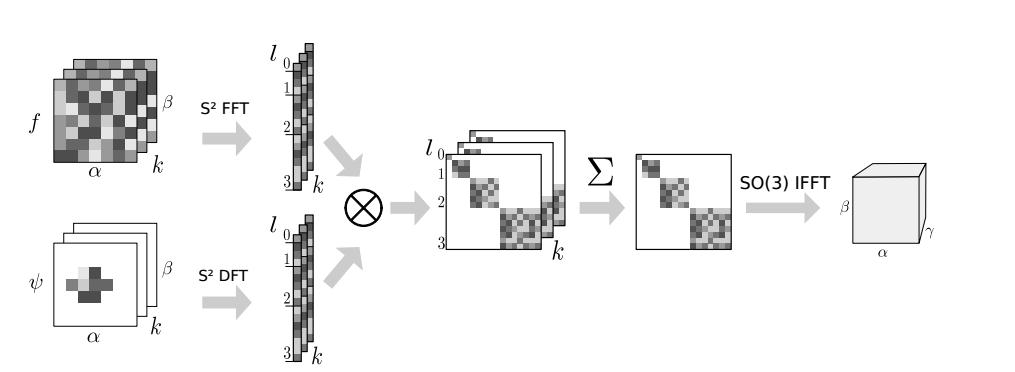

卷积神经网络(CNN)可以很好的处理二维平面图像的问题。然而,对球面图像进行处理需求日益增加。例如,对无人机、机器人、自动驾驶汽车、分子回归问题、全球天气和气候模型的全方位视觉处理问题。将球形信号的平面投影作为卷积神经网络的输入的这种天真做法是注定要失败的,如下图1所示,而这种投影引起的空间扭曲会导致CNN无法共享权重。

图1

这篇论文中介绍了如何构建球形CNN的模块,提出了利用广义傅里叶变换(FFT)进行快速群卷积(互相关)的操作。通过傅里叶变换来实现球形CNN的示意图如下所示:
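The underlying idea, computing a group correlation by multiplying in the Fourier domain, can be illustrated on the much simpler cyclic group, where the ordinary discrete Fourier transform applies. The sketch below is only a one-dimensional analogue, not the spherical or rotation-group transform used in the paper; the function names and the toy signals are assumptions made for the example.

```python
import cmath


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (kept simple on purpose)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [
        sum(x[k] * cmath.exp(sign * 2j * cmath.pi * j * k / n) for k in range(n))
        for j in range(n)
    ]
    return [v / n for v in out] if inverse else out


def correlate_direct(f, psi):
    """out[s] = sum_k psi[(k - s) mod n] * f[k]: shift the filter, take an inner product."""
    n = len(f)
    return [sum(psi[(k - s) % n] * f[k] for k in range(n)) for s in range(n)]


def correlate_fourier(f, psi):
    """Same result via the correlation theorem (valid for a real-valued filter)."""
    f_hat, psi_hat = dft(f), dft(psi)
    product = [a * b.conjugate() for a, b in zip(f_hat, psi_hat)]
    return [v.real for v in dft(product, inverse=True)]


f = [1.0, 2.0, 3.0, 4.0, 0.0, 0.0, 0.0, 0.0]
psi = [1.0, -1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
direct = correlate_direct(f, psi)
via_fourier = correlate_fourier(f, psi)
```

Both routes give the same output up to floating-point error. The naive transform here costs as much as the direct sum; the speed-up only appears once a fast transform replaces it, which is the role the generalized FFT plays for rotations of the sphere.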

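Best paper 3:

Continuous Adaptation via Meta-learning in Nonstationary and Competitive Environments

The ability to keep learning and adapting from limited experience in nonstationary environments is an important milestone on the road to true artificial intelligence. This paper casts continuous adaptation as a learning-to-learn problem and develops a gradient-based meta-learning algorithm for adapting to dynamically changing and adversarial scenarios. It also designs a multi-agent competitive environment, RoboSumo, and defines iterated adaptation games for testing different aspects of continuous adaptation. The experiments show that meta-learning adapts more effectively than reactive baselines in the few-shot regime, and that it is well suited to multi-agent learning and competition.

Three types of agents are used in the experiments, as shown in Figure 1(a). They differ in anatomy: the number of legs, their positions, and the constraints on the thigh and knee joints. Panel (b) shows the nonstationary locomotion environment, in which the torque applied to the red-colored legs is scaled by a dynamically changing factor. Panel (c) shows the RoboSumo competitive environment.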

Maximum a posteriori policy optimisation

Authors: Abbas Abdolmaleki, Jost Tobias Springenberg, Nicolas Heess, Yuval Tassa, Remi Munos

Hierarchical representations for efficient architecture search

Authors: Hanxiao Liu (CMU), Karen Simonyan, Oriol Vinyals, Chrisantha Fernando, Koray Kavukcuoglu

Learning an embedding space for transferable robot skills

Authors: Karol Hausman, Jost Tobias Springenberg, Ziyu Wang, Nicolas Heess, Martin Riedmiller

Learning awareness models

Authors: Brandon Amos, Laurent Dinh, Serkan Cabi, Thomas Rothörl, Sergio Gómez Colmenarejo, Alistair M Muldal, Tom Erez, Yuval Tassa, Nando de Freitas, Misha Denil

Kronecker-factored curvature approximations for recurrent neural networks

Authors: James Martens, Jimmy Ba (Vector Institute), Matthew Johnson (Google)

Distributed distributional deterministic policy gradients

Authors: Gabriel Barth-maron, Matthew Hoffman, David Budden, Will Dabney, Daniel Horgan, Dhruva Tirumala Bukkapatnam, Alistair M Muldal, Nicolas Heess, Timothy Lillicrap

The Kanerva Machine: A generative distributed memory

Authors: Yan Wu, Greg Wayne, Alex Graves, Timothy Lillicrap

Memory-based parameter adaptation

Authors: Pablo Sprechmann, Siddhant Jayakumar, Jack Rae, Alexander Pritzel, Adria P Badia, Benigno Uria, Oriol Vinyals, Demis Hassabis, Razvan Pascanu, Charles Blundell

SCAN: Learning hierarchical compositional visual concepts

Authors: Irina Higgins, Nicolas Sonnerat, Loic Matthey, Arka Pal, Christopher P Burgess, Matko Bošnjak, Murray Shanahan, Matthew Botvinick, Alexander Lerchner

Emergence of linguistic communication from referential games with symbolic and pixel input

Authors: Angeliki Lazaridou, Karl M Hermann, Karl Tuyls, Stephen Clark

Many paths to equilibrium: GANs do not need to decrease a divergence at every step

Authors: William Fedus (Université de Montréal), Mihaela Rosca, Balaji Lakshminarayanan, Andrew Dai (Google), Shakir Mohamed, Ian Goodfellow (Google Brain)

Can neural networks understand logical entailment?

Authors: Richard Evans, David Saxton, David Amos, Pushmeet Kohli, Edward Grefenstette

Distributed prioritized experience replay

Authors: Daniel Horgan, John Quan, David Budden, Gabriel Barth-maron, Matteo Hessel, Hado van Hasselt, David Silver

The Reactor: A fast and sample-efficient actor-critic agent for reinforcement learning

Authors: Audrunas Gruslys, Will Dabney, Mohammad Gheshlaghi Azar, Bilal Piot, Marc G Bellemare, Remi Munos

On the importance of single directions for generalization

Authors: Ari Morcos, David GT Barrett, Neil C Rabinowitz, Matthew Botvinick

Memory architectures in recurrent neural network language models

Authors: Dani Yogatama, Yishu Miao, Gábor Melis, Wang Ling, Adhiguna Kuncoro, Chris Dyer, Phil Blunsom

Few-shot autoregressive density estimation: Towards learning to learn distributions

Authors: Scott Reed, Yutian Chen, Thomas Paine, Aaron van den Oord, S. M. Ali Eslami, Danilo J Rezende, Oriol Vinyals, Nando de Freitas

On the state of the art of evaluation in neural language models

Authors: Gábor Melis, Chris Dyer, Phil Blunsom

Emergent communication through negotiation

Authors: Kris Cao, Angeliki Lazaridou, Marc Lanctot, Joel Z Leibo, Karl Tuyls, Stephen Clark

Compositional obverter communication learning from raw visual input

Authors: Edward Choi, Angeliki Lazaridou, Nando de Freitas

Noisy networks for exploration

Authors: Meire Fortunato, Mohammad Gheshlaghi Azar, Bilal Piot, Jacob Menick, Matteo Hessel, Ian Osband, Alex Graves, Volodymyr Mnih, Remi Munos, Demis Hassabis, Olivier Pietquin, Charles Blundell, Shane Legg

At the forefront of innovation in neural networks and deep learning, Google focuses on both theory and application, developing learning approaches to understand and generalize. As a Platinum Sponsor of ICLR 2018, Google will have more than 130 researchers attending, serving on organizing committees and in workshops and presenting papers and posters, both contributing to and learning from the broader academic research community.

The list below covers the research Google is presenting at ICLR 2018:

Oral contributions:

Wasserstein Auto-Encoders

Ilya Tolstikhin, Olivier Bousquet, Sylvain Gelly, Bernhard Scholkopf

On the Convergence of Adam and Beyond (Best Paper Award)

Authors: Sashank J. Reddi, Satyen Kale, Sanjiv Kumar

Ask the Right Questions: Active Question Reformulation with Reinforcement Learning

Authors: Christian Buck, Jannis Bulian, Massimiliano Ciaramita, Wojciech Gajewski, Andrea Gesmundo, Neil Houlsby, Wei Wang

Beyond Word Importance: Contextual Decompositions to Extract Interactions from LSTMs

Authors: W. James Murdoch, Peter J. Liu, Bin Yu

Conference posters:

Boosting the Actor with Dual Critic

Bo Dai, Albert Shaw, Niao He, Lihong Li, Le Song

MaskGAN: Better Text Generation via Filling in the _______

William Fedus, Ian Goodfellow, Andrew M. Dai

Scalable Private Learning with PATE

Nicolas Papernot, Shuang Song, Ilya Mironov, Ananth Raghunathan, Kunal Talwar, Ulfar Erlingsson

Deep Gradient Compression: Reducing the Communication Bandwidth for Distributed Training

Yujun Lin, Song Han, Huizi Mao, Yu Wang, William J. Dally

Flipout: Efficient Pseudo-Independent Weight Perturbations on Mini-Batches

Yeming Wen, Paul Vicol, Jimmy Ba, Dustin Tran, Roger Grosse

Latent Constraints: Learning to Generate Conditionally from Unconditional Generative Models

Adam Roberts, Jesse Engel, Matt Hoffman

Multi-Mention Learning for Reading Comprehension with Neural Cascades

Swabha Swayamdipta, Ankur P. Parikh, Tom Kwiatkowski

QANet: Combining Local Convolution with Global Self-Attention for Reading Comprehension

Adams Wei Yu, David Dohan, Thang Luong, Rui Zhao, Kai Chen, Mohammad Norouzi, Quoc V. Le

Sensitivity and Generalization in Neural Networks: An Empirical Study

Roman Novak, Yasaman Bahri, Daniel A. Abolafia, Jeffrey Pennington, Jascha Sohl-Dickstein

Action-dependent Control Variates for Policy Optimization via Stein Identity

Hao Liu, Yihao Feng, Yi Mao, Dengyong Zhou, Jian Peng, Qiang Liu

An Efficient Framework for Learning Sentence Representations

Lajanugen Logeswaran, Honglak Lee

Fidelity-Weighted Learning

Mostafa Dehghani, Arash Mehrjou, Stephan Gouws, Jaap Kamps, Bernhard Schölkopf

Generating Wikipedia by Summarizing Long Sequences

Peter J. Liu, Mohammad Saleh, Etienne Pot, Ben Goodrich, Ryan Sepassi, Lukasz Kaiser, Noam Shazeer

Matrix Capsules with EM Routing

Geoffrey Hinton, Sara Sabour, Nicholas Frosst

Temporal Difference Models: Model-Free Deep RL for Model-Based Control

Sergey Levine, Shixiang Gu, Murtaza Dalal, Vitchyr Pong

Deep Neural Networks as Gaussian Processes

Jaehoon Lee, Yasaman Bahri, Roman Novak, Samuel L. Schoenholz, Jeffrey Pennington, Jascha Sohl-Dickstein

Many Paths to Equilibrium: GANs Do Not Need to Decrease a Divergence at Every Step

William Fedus, Mihaela Rosca, Balaji Lakshminarayanan, Andrew M. Dai, Shakir Mohamed, Ian Goodfellow

Initialization Matters: Orthogonal Predictive State Recurrent Neural Networks

Krzysztof Choromanski, Carlton Downey, Byron Boots

Learning Differentially Private Recurrent Language Models

H. Brendan McMahan, Daniel Ramage, Kunal Talwar, Li Zhang

Learning Latent Permutations with Gumbel-Sinkhorn Networks

Gonzalo Mena, David Belanger, Scott Linderman, Jasper Snoek

Leave no Trace: Learning to Reset for Safe and Autonomous Reinforcement Learning

Benjamin Eysenbach, Shixiang Gu, Julian Ibarz, Sergey Levine

Meta-Learning for Semi-Supervised Few-Shot Classification

Mengye Ren, Eleni Triantafillou, Sachin Ravi, Jake Snell, Kevin Swersky, Josh Tenenbaum, Hugo Larochelle, Richard Zemel

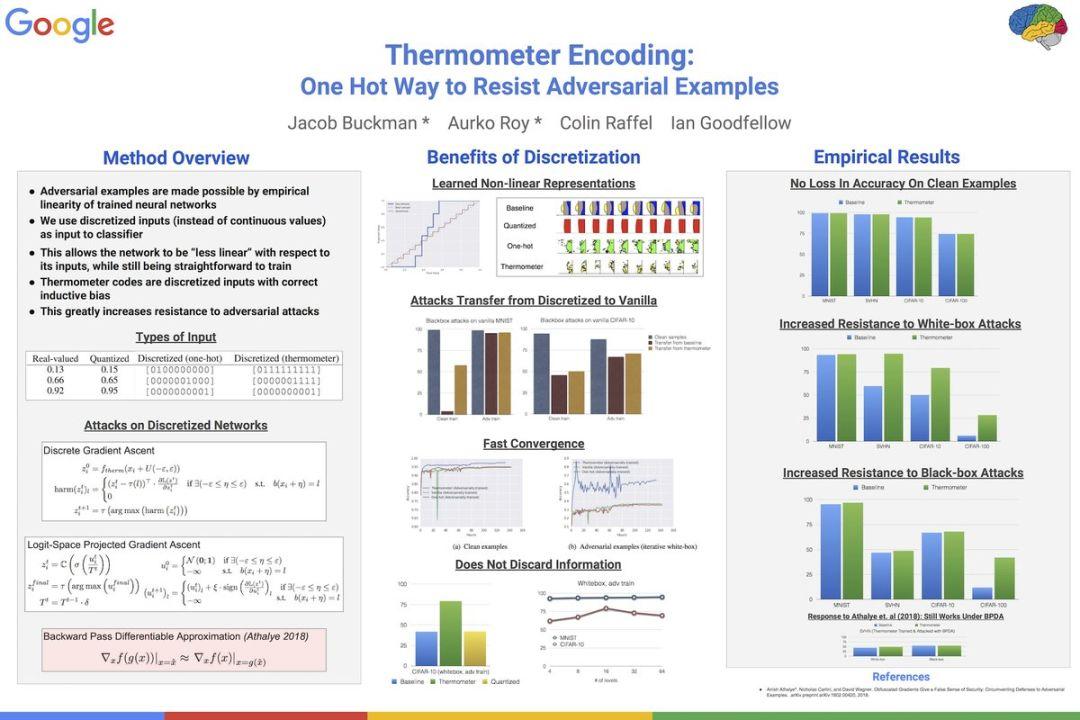

Thermometer Encoding: One Hot Way to Resist Adversarial Examples

Jacob Buckman, Aurko Roy, Colin Raffel, Ian Goodfellow

A Hierarchical Model for Device Placement

Azalia Mirhoseini, Anna Goldie, Hieu Pham, Benoit Steiner, Quoc V. Le, Jeff Dean

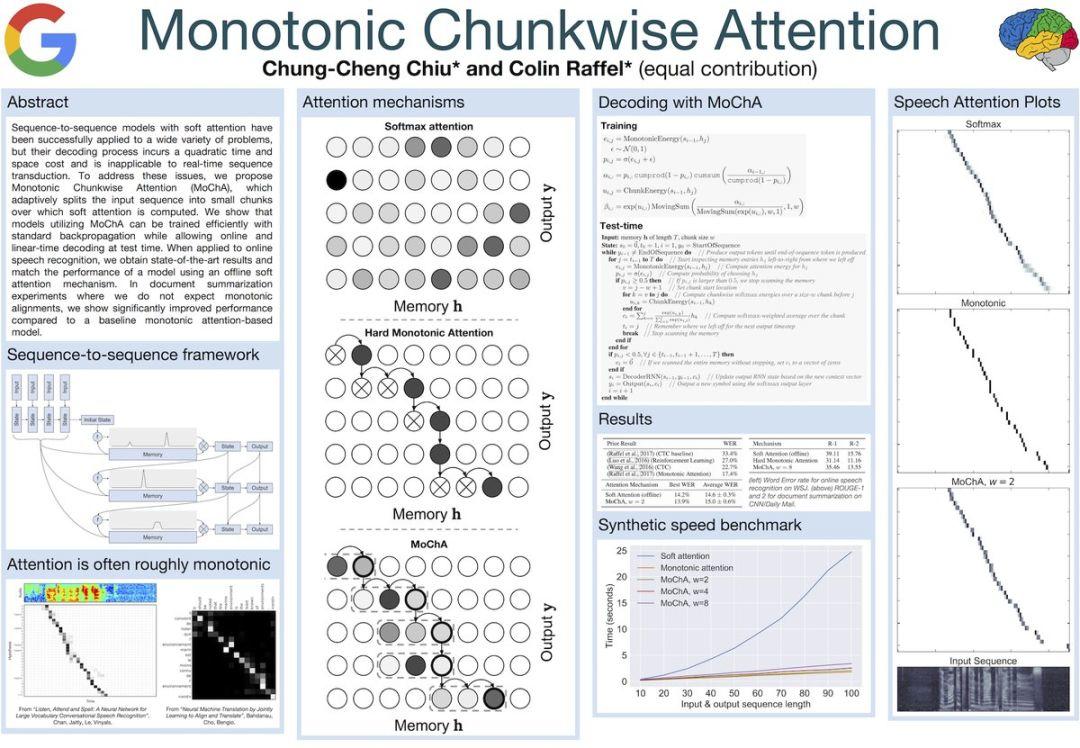

Monotonic Chunkwise Attention

Chung-Cheng Chiu, Colin Raffel

Training Confidence-calibrated Classifiers for Detecting Out-of-Distribution Samples

Kimin Lee, Honglak Lee, Kibok Lee, Jinwoo Shin

Trust-PCL: An Off-Policy Trust Region Method for Continuous Control

Ofir Nachum, Mohammad Norouzi, Kelvin Xu, Dale Schuurmans

Ensemble Adversarial Training: Attacks and Defenses

Florian Tramèr, Alexey Kurakin, Nicolas Papernot, Ian Goodfellow, Dan Boneh, Patrick McDaniel

Stochastic Variational Video Prediction

Mohammad Babaeizadeh, Chelsea Finn, Dumitru Erhan, Roy Campbell, Sergey Levine

Depthwise Separable Convolutions for Neural Machine Translation

Lukasz Kaiser, Aidan N. Gomez, Francois Chollet

Don’t Decay the Learning Rate, Increase the Batch Size

Samuel L. Smith, Pieter-Jan Kindermans, Chris Ying, Quoc V. Le

Generative Models of Visually Grounded Imagination

Ramakrishna Vedantam, Ian Fischer, Jonathan Huang, Kevin Murphy

Large Scale Distributed Neural Network Training through Online Distillation

Rohan Anil, Gabriel Pereyra, Alexandre Passos, Robert Ormandi, George E. Dahl, Geoffrey E. Hinton

Learning a Neural Response Metric for Retinal Prosthesis

Nishal P. Shah, Sasidhar Madugula, Alan Litke, Alexander Sher, EJ Chichilnisky, Yoram Singer, Jonathon Shlens

Neumann Optimizer: A Practical Optimization Algorithm for Deep Neural Networks

Shankar Krishnan, Ying Xiao, Rif A. Saurous

A Neural Representation of Sketch Drawings

David Ha, Douglas Eck

Deep Bayesian Bandits Showdown: An Empirical Comparison of Bayesian Deep Networks for Thompson Sampling

Carlos Riquelme, George Tucker, Jasper Snoek

Generalizing Hamiltonian Monte Carlo with Neural Networks

Daniel Levy, Matthew D. Hoffman, Jascha Sohl-Dickstein

Leveraging Grammar and Reinforcement Learning for Neural Program Synthesis

Rudy Bunel, Matthew Hausknecht, Jacob Devlin, Rishabh Singh, Pushmeet Kohli

On the Discrimination-Generalization Tradeoff in GANs

Pengchuan Zhang, Qiang Liu, Dengyong Zhou, Tao Xu, Xiaodong He

A Bayesian Perspective on Generalization and Stochastic Gradient Descent

Samuel L. Smith, Quoc V. Le

Learning how to Explain Neural Networks: PatternNet and PatternAttribution

Pieter-Jan Kindermans, Kristof T. Schütt, Maximilian Alber, Klaus-Robert Müller, Dumitru Erhan, Been Kim, Sven Dähne

Skip RNN: Learning to Skip State Updates in Recurrent Neural Networks

Víctor Campos, Brendan Jou, Xavier Giró-i-Nieto, Jordi Torres, Shih-Fu Chang

Towards Neural Phrase-based Machine Translation

Po-Sen Huang, Chong Wang, Sitao Huang, Dengyong Zhou, Li Deng

Unsupervised Cipher Cracking Using Discrete GANs

Aidan N. Gomez, Sicong Huang, Ivan Zhang, Bryan M. Li, Muhammad Osama, Lukasz Kaiser

Variational Image Compression With A Scale Hyperprior

Johannes Ballé, David Minnen, Saurabh Singh, Sung Jin Hwang, Nick Johnston

Workshop posters:

Local Explanation Methods for Deep Neural Networks Lack Sensitivity to Parameter Values

Julius Adebayo, Justin Gilmer, Ian Goodfellow, Been Kim

Stochastic Gradient Langevin Dynamics that Exploit Neural Network Structure

Zachary Nado, Jasper Snoek, Bowen Xu, Roger Grosse, David Duvenaud, James Martens

Towards Mixed-initiative generation of multi-channel sequential structure

Anna Huang, Sherol Chen, Mark J. Nelson, Douglas Eck

Can Deep Reinforcement Learning Solve Erdos-Selfridge-Spencer Games?

Maithra Raghu, Alex Irpan, Jacob Andreas, Robert Kleinberg, Quoc V. Le, Jon Kleinberg

GILBO: One Metric to Measure Them All

Alexander Alemi, Ian Fischer

HoME: a Household Multimodal Environment

Simon Brodeur, Ethan Perez, Ankesh Anand, Florian Golemo, Luca Celotti, Florian Strub, Jean Rouat, Hugo Larochelle, Aaron Courville

Learning to Learn without Labels

Luke Metz, Niru Maheswaranathan, Brian Cheung, Jascha Sohl-Dickstein

Learning via Social Awareness: Improving Sketch Representations with Facial Feedback

Natasha Jaques, Jesse Engel, David Ha, Fred Bertsch, Rosalind Picard, Douglas Eck

Negative Eigenvalues of the Hessian in Deep Neural Networks

Guillaume Alain, Nicolas Le Roux, Pierre-Antoine Manzagol

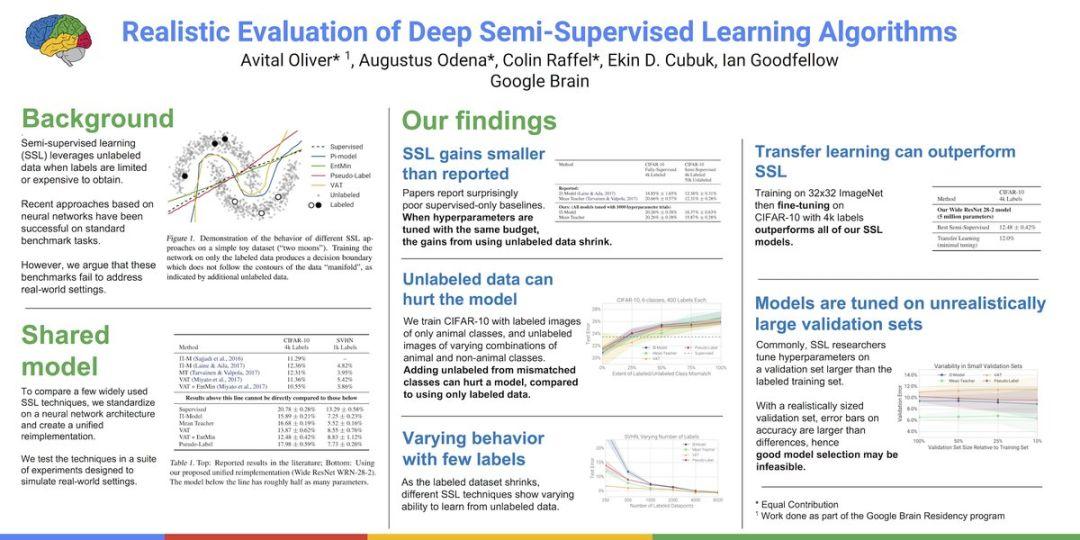

Realistic Evaluation of Semi-Supervised Learning Algorithms

Avital Oliver, Augustus Odena, Colin Raffel, Ekin Cubuk, Ian Goodfellow

Winner’s Curse? On Pace, Progress, and Empirical Rigor

D. Sculley, Jasper Snoek, Alex Wiltschko, Ali Rahimi

Meta-Learning for Batch Mode Active Learning

Sachin Ravi, Hugo Larochelle

To Prune, or Not to Prune: Exploring the Efficacy of Pruning for Model Compression

Michael Zhu, Suyog Gupta

Adversarial Spheres

Justin Gilmer, Luke Metz, Fartash Faghri, Sam Schoenholz, Maithra Raghu, Martin Wattenberg, Ian Goodfellow

Clustering Meets Implicit Generative Models

Francesco Locatello, Damien Vincent, Ilya Tolstikhin, Gunnar Ratsch, Sylvain Gelly, Bernhard Scholkopf

Decoding Decoders: Finding Optimal Representation Spaces for Unsupervised Similarity Tasks

Vitalii Zhelezniak, Dan Busbridge, April Shen, Samuel L. Smith, Nils Y. Hammerla

Learning Longer-term Dependencies in RNNs with Auxiliary Losses

Trieu Trinh, Quoc Le, Andrew Dai, Thang Luong

Graph Partition Neural Networks for Semi-Supervised Classification

Alexander Gaunt, Danny Tarlow, Marc Brockschmidt, Raquel Urtasun, Renjie Liao, Richard Zemel

Searching for Activation Functions

Prajit Ramachandran, Barret Zoph, Quoc Le

Time-Dependent Representation for Neural Event Sequence Prediction

Yang Li, Nan Du, Samy Bengio

Faster Discovery of Neural Architectures by Searching for Paths in a Large Model

Hieu Pham, Melody Guan, Barret Zoph, Quoc V. Le, Jeff Dean

Intriguing Properties of Adversarial Examples

Ekin Dogus Cubuk, Barret Zoph, Sam Schoenholz, Quoc Le

PPP-Net: Platform-aware Progressive Search for Pareto-optimal Neural Architectures

Jin-Dong Dong, An-Chieh Cheng, Da-Cheng Juan, Wei Wei, Min Sun

The Mirage of Action-Dependent Baselines in Reinforcement Learning

George Tucker, Surya Bhupatiraju, Shixiang Gu, Richard E. Turner, Zoubin Ghahramani, Sergey Levine

Learning to Organize Knowledge with N-Gram Machines

Fan Yang, Jiazhong Nie, William W. Cohen, Ni Lao

Online variance-reducing optimization

Nicolas Le Roux, Reza Babanezhad, Pierre-Antoine Manzagol


Original article by fendouai. If you repost it, please credit the source: https://panchuang.net/2018/04/30/08e7bed3c4/